How I do the hand-drawn diagrams

Whenever I publish a story with a hand-drawn diagram, people ask how I do it.

There's really nothing to it. I draw the diagram and scan it.

To illustrate...

There are no tricks, but the pens really are fantastic. Highly recommended. I've been using them since 5th grade.

A transparent change, but an important one

In journalism, politics and business they talk about transparency as a universal virtue. If you disclose your conflicts, or say where your money comes from, or deal with your users openly and fairly -- those are obvious good things.

Transparency is different in software. When systems change, you want the changes to have no apparent effect on users and developers. It's like transparency in recording music: I want all the highs and lows, in the right proportions. I want my software to keep working even if you just rocked the foundations. That's what we aspire to. We hope.

Anyway, today I made a big change that's virtually impossible to show you because it's so transparent. But I'll try to explain it anyway.

When I write a blog post like the one you're reading now, I write it on a workstation computer. It could be my desktop in my apartment in Manhattan. Or my laptop, or netbook. I write and save and revise and save, over and over, just as you would edit a word processing document on your desktop, with one important difference. The changed version of the document is saved to a content management system running on another computer, in Rackspace's cloud. This saving process is done with XML-RPC, although it could just as easily be done with a REST interface or FTP.

From there, the document is passed through the CMS, rendered and transferred to a server running in Amazon's cloud. That server is the machine called scripting.com. This is the machine your browser or RSS aggregator talks to, to get the latest stuff from Dave. The transfer is made via FTP, and the finished content is accessed via HTTP.

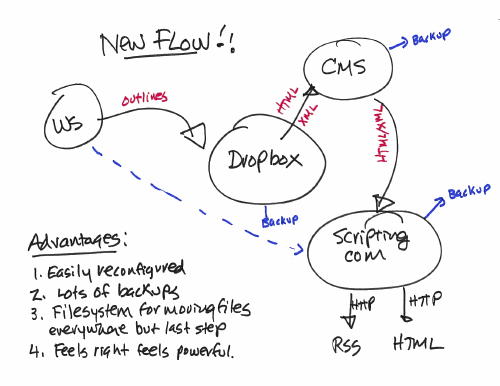

That's the old flow. Here's the new one.

The workstation saves its document to a Dropbox folder. The CMS is watching that folder, sees something new, renders it, and drops it into another Dropbox folder. That folder is served by Apache on the machine running scripting.com. Everything is done using the file system. The software just got a whole lot simpler. And much better backed up. And more flexible, because different machines can easily play the needed roles, or the same content served through scripting.com on one box could be served via egypt.com on another.

In a sense the filesystem has been turned into a simple multi-machine networked queuing system.
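To make the queue idea concrete, here's a rough sketch in Python of what the middle step might look like. It is not the actual CMS code. The folder names (`drafts`, `www`), the `.txt` and `.html` extensions, and the `render` and `process_inbox` functions are all made up for illustration, and it assumes Dropbox (or anything else) is keeping the two folders in sync between machines. One pass looks at the incoming folder, renders whatever is new or changed, and drops the result in the outgoing folder.

```python
import html
import tempfile
from pathlib import Path


def render(text: str) -> str:
    """Hypothetical stand-in for the CMS renderer: escaped text in minimal HTML."""
    return "<html><body><p>" + html.escape(text) + "</p></body></html>\n"


def process_inbox(inbox: Path, outbox: Path, seen: dict) -> list:
    """Render every inbox file that is new or changed since the last pass.

    `seen` maps file name -> modification time already handled, so the same
    version of a document is not rendered twice. Returns the names rendered.
    """
    rendered = []
    for src in sorted(inbox.glob("*.txt")):
        mtime = src.stat().st_mtime_ns
        if seen.get(src.name) == mtime:
            continue  # this version was already rendered
        dest = outbox / (src.stem + ".html")
        tmp = dest.with_name(dest.name + ".tmp")
        tmp.write_text(render(src.read_text(encoding="utf-8")), encoding="utf-8")
        # Write to a temp name, then rename, so a reader of the outgoing
        # folder is less likely to pick up a half-written file.
        tmp.replace(dest)
        seen[src.name] = mtime
        rendered.append(src.name)
    return rendered


if __name__ == "__main__":
    # Demo in a throwaway directory; real folders would be synced ones.
    with tempfile.TemporaryDirectory() as root:
        inbox, outbox = Path(root, "drafts"), Path(root, "www")
        inbox.mkdir()
        outbox.mkdir()
        (inbox / "post.txt").write_text("Hello & welcome", encoding="utf-8")
        seen = {}
        print(process_inbox(inbox, outbox, seen))  # first pass renders the post
        print(process_inbox(inbox, outbox, seen))  # second pass finds nothing new
        print((outbox / "post.html").read_text(encoding="utf-8"))
```

In a running system you would call `process_inbox` in a loop with a short sleep between passes. The point is that nothing here knows about the network: each machine reads from one folder and writes to another, and the syncing layer moves the files.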

This is what I was trying to get working a few days ago.